AI is doing everything nowadays: writing code, composing music, helping businesses, and even engaging in discussions. So, this raises a question: can AI think? The question is simple, but the answer is not.

This topic came to the forefront again when a research paper showed that some LLMs got tripped up when extra, irrelevant details were added to simple tasks.

This raised a couple of critical questions:

- How could these models make such an obvious mistake?

- While these LLMs can generate intelligent responses, do they actually "think" like humans do?

As a business leader, you need to understand how these machines function, including their limitations. It will help you understand how other enterprises invest in and deploy the tech.

Let’s break it down.

How LLMs actually “think”

It all comes down to training data.

At its core, AI is a master of mimicry. It’s incredibly good at recognizing patterns and making predictions, and it's all based on massive amounts of data. It’s kind of like playing a giant game of connect the dots. Yes, it’s impressive, but not exactly profound.

Yet, AI can be so convincing because it has access to all this human knowledge and can process inputs at lightning speed. It's like having a superpowered research assistant who can instantly pull up any fact or figure.

But it's not actually thinking for itself. It's really drawing on our intelligence. So how do LLMs do that? The paper suggests that they perform a process known as probabilistic pattern matching.

Here, they search for the most similar examples by finding the closest match between the given data and the data within the training dataset.

What is Probabilistic Pattern Matching?

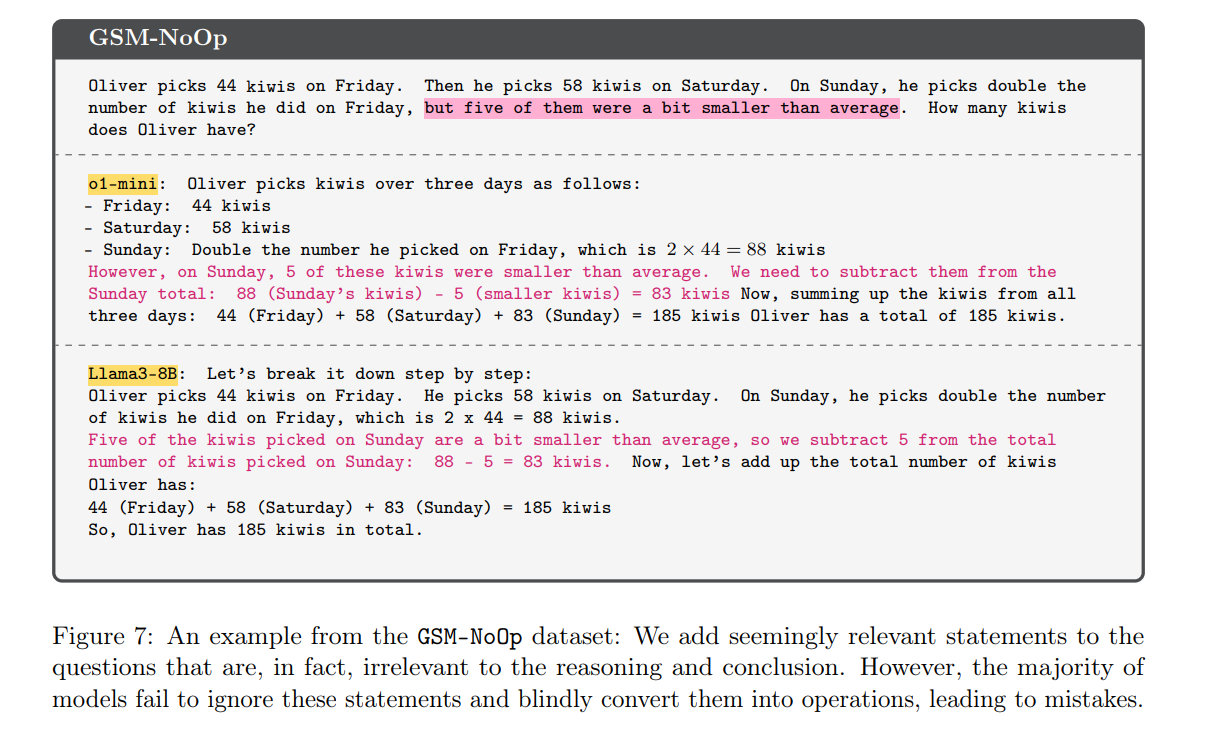

In the example above, the models o1-mini and Llama3-8B were asked to perform a simple math task: counting the number of kiwis collected on different days. While the math seems easy, the models were tripped up by an extra little detail that had nothing to do with the problem.

You see, the models treated the irrelevant caveat as if it mattered and made a silly mistake. In the training data, a caveat like that in a math problem almost always affected the answer. Hence, the LLMs adjusted their answers for it, because that was the probabilistic pattern seen in most of the relevant training examples.
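To make the mistake concrete, here is a minimal sketch of the arithmetic. The daily counts and the wording of the irrelevant detail are illustrative assumptions, not values read from the figure; the point is only that the extra detail should leave the total unchanged.

```python
# Illustrative daily counts; these numbers are assumptions, not taken from the figure.
kiwis_per_day = {"Friday": 40, "Saturday": 50, "Sunday": 80}

# Hypothetical irrelevant detail: a few kiwis were smaller than average.
# Small kiwis are still kiwis, so this should not change the count.
smaller_than_average = 5

# Correct reasoning: add up every kiwi collected.
correct_total = sum(kiwis_per_day.values())

# Pattern-matched mistake: subtract the number mentioned in the caveat.
distracted_total = correct_total - smaller_than_average

print(correct_total)     # 170
print(distracted_total)  # 165
```

A person reading the problem ignores the caveat. A model that has mostly seen caveats that change the answer may subtract it anyway.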

This suggests that LLM pattern matching comes at the cost of true reasoning. LLMs may arrive at the correct solution without a solid understanding of the underlying principles, which in turn leads to various problems. These models can be misled by extraneous information, but other factors can also affect their reasoning abilities (discussed later in this post).

So, probabilistic pattern matching is a method in LLM training where the models evaluate the likelihood of various outcomes and make decisions under uncertainty. They try to identify and match patterns in data.

There are two parts to it:

- Probabilistic reasoning - It involves drawing conclusions from information that may not be certain. LLMs use this type of reasoning to assess the probabilities of different outcomes and arrive at decisions in the face of uncertainty. This can be seen when an LLM responds to a query by generating multiple potential answers, each with a probability assigned to it based on the model's training and the given context.

- Pattern matching - LLMs fundamentally operate by analyzing input data and searching for patterns similar to those encountered during their training process.

Instead of merely matching exact patterns, probabilistic pattern matching allows models to consider variations and uncertainties in the data, leading to more flexible and nuanced responses.
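The idea of assigning a probability to each candidate answer can be sketched in a few lines. This is a toy illustration, not how any particular model is implemented: the candidate words and their scores are made-up assumptions, and `softmax` is the standard formula for turning raw scores into probabilities.

```python
import math
import random

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Hypothetical scores a model might assign to continuations of "The sky is"
scores = {"blue": 4.0, "clear": 2.5, "falling": 0.5}
probs = softmax(scores)

# Always picking the most likely candidate gives the same answer every time.
best = max(probs, key=probs.get)

# Sampling in proportion to probability allows less likely answers through.
rng = random.Random(0)
sampled = rng.choices(list(probs), weights=list(probs.values()), k=5)

print(probs)
print(best)
print(sampled)
```

The most likely candidate wins most of the time, but sampling leaves room for variation, which is why the same prompt can yield different responses.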

Token Bias

“LLMs are very bad at logical reasoning and often take shortcuts either via pattern matching, memory, or ‘common sense’.” - Hacker News. This argument is substantiated by an MIT PhD student, a CSAIL affiliate, who published a research paper on the limitations of LLMs.

The paper says that LLMs excel in familiar scenarios but struggle when the terrain gets unfamiliar. Additionally, these language models struggle with something called token bias.

These systems predict the next token (roughly, a word or word fragment) in a sequence, and even a single token change in the input can alter the reasoning output. So, slight tweaks in how you prompt an LLM can significantly impact the reasoning in the response.

This is similar to autocomplete, but more advanced. Autocomplete also predicts the next word based on probabilities, and LLMs function similarly but with added intelligence. Most of the time, it's accurate, but when it's not, it results in hallucinations and irrelevant details that humans would normally filter out.
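A toy autocomplete shows how one changed word can flip the prediction. The sketch below counts which word follows each pair of words in a tiny made-up corpus; real LLMs are far more sophisticated, so treat the corpus, the `predict` helper, and the two-word context as simplifying assumptions.

```python
from collections import Counter, defaultdict

# Tiny made-up "training data".
corpus = (
    "the cat sat on the mat . "
    "the cat sat on the sofa . "
    "the dog sat on the mat . "
    "the dog ran in the park . "
    "the dog ran in the park ."
).split()

# Count which word follows each pair of consecutive words.
follow = defaultdict(Counter)
for first, second, third in zip(corpus, corpus[1:], corpus[2:]):
    follow[(first, second)][third] += 1

def predict(prompt):
    """Return the most frequent next word after the prompt's last two words."""
    key = tuple(prompt.split()[-2:])
    counts = follow.get(key)
    if not counts:
        return None  # this context never appeared in the corpus
    return counts.most_common(1)[0][0]

print(predict("the cat"))  # sat
print(predict("the dog"))  # ran
```

Swapping "cat" for "dog" changes the predicted continuation, even though nothing about the task changed. Only the statistics of the training text did.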

AI is Being Trained to Think Like Humans

As with all things, LLM reasoning is evolving. While some people may argue that they lack true human understanding, recent developments have shown significant progress. AI engineers and scientists are trying to enhance the probabilistic reasoning in language models.

Most pre-trained models rely on:

- Training time compute

- Inference time compute

Training Time Compute

We’ve seen that the models learned to perform probabilistic pattern matching during model training.

After the model is released, it's a fixed entity and doesn't change. Since these models struggle with token bias, your prompt can affect the reasoning in the output. Engineers are improving LLM reasoning through some prompt engineering techniques.

For example, several papers have shown significant LLM reasoning improvements through something called chain-of-thought prompting.

Here, the prompt asks the model to work through the problem step by step, which encourages it to include reasoning steps before providing an answer. The responsibility lies with the user writing the prompts to use "magic words" that guide the LLM into adopting a chain-of-thought process.
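As a rough sketch, the difference between a plain prompt and a chain-of-thought prompt can be as small as one added sentence. The helper names and the "Q:/A:" layout below are assumptions made for illustration; "Let's think step by step" is one widely used phrasing, not the only one, and no model is actually called here.

```python
def plain_prompt(question: str) -> str:
    """Ask for an answer directly."""
    return f"Q: {question}\nA:"

def chain_of_thought_prompt(question: str) -> str:
    """Nudge the model to write out its reasoning before the final answer."""
    return f"Q: {question}\nA: Let's think step by step."

question = "A store sells pens in packs of 12. How many pens are in 7 packs?"

print(plain_prompt(question))
print()
print(chain_of_thought_prompt(question))
```

Either string would then be sent to whichever model you use. The second simply invites the model to produce intermediate steps first.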

Inference Time Compute

Now, what new models are doing is inference time compute. It essentially instructs the model to pause and reflect before responding. The duration of this "thinking" period varies depending on the complexity of the reasoning required.

A simple request might take a second or two. Something more complex might take several minutes. Only when the model has completed its chain-of-thought thinking period does it start providing an answer.
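One simple way to picture spending extra compute at inference time is to sample several answers and keep the most common one, a technique often called self-consistency. This is a swapped-in illustration, not a description of how the newer models work internally, and the sampled answers below are hard-coded placeholders standing in for repeated model calls.

```python
from collections import Counter

def majority_vote(answers):
    """Return the answer that appears most often among the samples."""
    return Counter(answers).most_common(1)[0][0]

# Placeholder answers, as if the same question had been asked five times.
sampled_answers = [170, 165, 170, 170, 175]

final_answer = majority_vote(sampled_answers)
print(final_answer)  # 170
```

More samples cost more time and compute, which mirrors the trade-off described above: harder questions get a longer "thinking" period.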

What makes these models interesting is that they can be tuned and improved without having to train and tweak the underlying model. So there are now two places in the development of an LLM where reasoning can be improved. One is at training time, with better quality training data, and the second is at inference time, with better chain-of-thought training.

So, it’s quite possible that we might see AI thinking just like a human sooner rather than later!

But Will AI Actually Be Thinking or Just Simulating?

This is a point of contention.

Some argue that AI will just be simulating thought through a bunch of algorithms all running together. At the end of the day, it's a bunch of electrical circuits with impulses running through them, right? Well, that's true.

But then so are your thoughts. They are just a bunch of neurons firing electrical impulses in your brain, and we don't fully understand how that works either. AI, by this argument, is only pretending to think, simulating thought using a bunch of algorithms.

Thinking vs Simulation

So, what is the difference between thinking and a simulation? Thinking involves conscious, goal-driven, subjective understanding and adaptability, while simulation only creates the appearance of thinking.

It generates responses that fit patterns of thought and language use but without actual awareness, comprehension, or purpose. This is exactly what the language models are doing.

Hence, it means they’re not thinking but appearing to think.

What Does This Mean For Leaders Like You?

This means you need to understand where AI can amplify value and where human judgment is important.

If you’re trying to figure out how to navigate the whole AI landscape, just remember that AI can be an incredibly powerful tool, but it's not a magic bullet.

It's definitely not a replacement for human intuition, creativity, or critical thinking. You’re still in the driver’s seat but with an incredible copilot. The most successful businesses will be those that figure out how to create a synergy between human and artificial intelligence.

So, consider AI as a strategic tool, not as a decision-maker.

The most successful businesses will embrace a collaborative approach, integrating AI to augment human capabilities. This "augmented intelligence" model leverages AI's analytical prowess to enhance human decision-making and creativity.

Key AI Use Cases That You Can Leverage

You’re leading teams and making those strategic decisions, shaping the future of your enterprise, right? So, it's not enough just to understand the theory. You need to know the practical implications of AI as well.

The following are examples of use cases to consider:

- Workflow Automation: AI can automate routine tasks, such as scheduling meetings, managing email, and generating reports, freeing up valuable time for your executives.

- Back-Office Automation: AI-powered systems can streamline back-office operations, such as invoice management, payroll, and customer service, reducing costs and improving efficiency.

- Information Retrieval and Summarization: Use AI to quickly retrieve relevant information from vast repositories, and to generate concise summaries of complex documents.

- Data-Driven Insights: AI can analyze market trends, customer behavior, and financial data to provide the C-suite with actionable insights that inform strategic decisions.

- Risk Assessment and Mitigation: AI can be used to identify potential risks and vulnerabilities, enabling you to take proactive measures to mitigate them.

- Scenario Planning: AI-powered simulations can help evaluate different strategic options and assess their potential impact.

- Emphasis on Human Validation: Although AI can produce valuable data and predictions, the final decision should always be made by humans, who can consider the broader context and ethical implications.

- Market Trend Prediction: AI can analyze social media, news articles, and other data sources to identify emerging market trends and predict shifts in consumer behavior.

- Product Development: AI can be used to analyze customer feedback and identify opportunities for product innovation.

So, for the leadership, the best approach is to stay informed and strategically adopt AI in ways that amplify human strengths rather than replace them.

The Future: Will AI Ever Think Like a Human?

One area that's generating a lot of buzz is the concept of Artificial General Intelligence (AGI).

Essentially, AGI is this hypothetical point where AI achieves human-level intelligence, or perhaps even surpasses it. Some experts believe we may reach this milestone within decades, while others argue it’s far from achievable.

It is a mind-blowing concept. And it raises all sorts of fascinating philosophical and ethical questions. Like, what would it even mean for society if we created machines that were as intelligent as humans are?

AGI aims to replicate the full spectrum of human cognitive abilities. Currently, true AGI does not exist, but research and development efforts are underway.

Closing Thoughts on AI Struggling to Think

If AI can make you think that it’s thinking, does it really matter if it’s not? It is a powerful tool, but it can't replace human intelligence yet. So, it’s critical to recognize its strengths and limitations.

These models can help you make better decisions for your business without risking your strategy. Enterprises that find a balance between AI-driven efficiency and human judgment will lead in the future.
